AI and machine learning have affected people in different aspects throughout the world. Machine learning has penetrated our lives in a very short time, and its effects are everlasting. However, the most neglected aspect of AI and machine learning is that it can be manipulated and there can be attacks on AI. Cyber attackers and hackers can misuse AI, and it is very vulnerable. There can be several reasons behind this vulnerability, including AI’s inherent limitations. A poor management system can also be a major threat to AI tech and users.

There can be scenarios in which your adversaries might get access to an AI system that may result in alterations to data. This type of issue can create havoc among the users. There are so many ways in which such attacks can happen.

Defining machine learning and AI

If we talk about machine learning, it means training data setups so that models trained can perform the tasks assigned.

However, AI comes with the ability to mimic the human mind to perform complicated problems. Artificial intelligence uses machine learning to do the tasks assigned to it.

How do attackers exploit vulnerabilities of AI models?

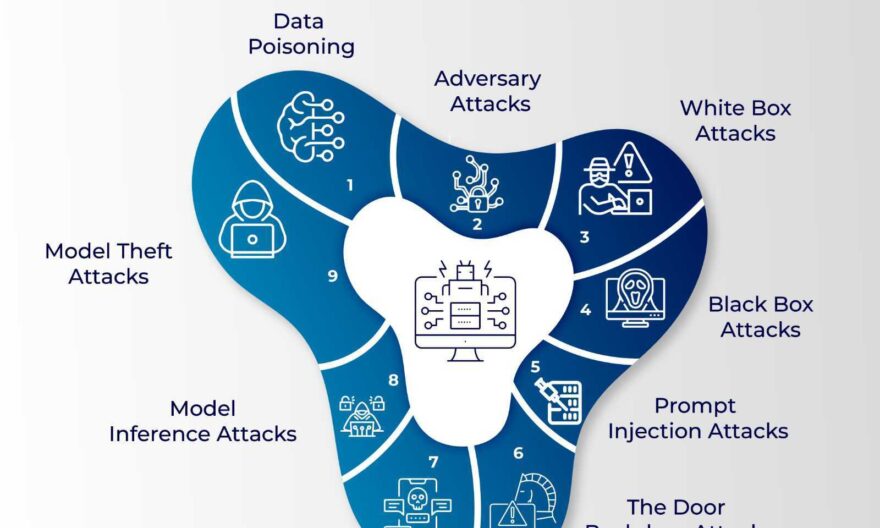

Attackers can use multiple techniques to target AI models, such as data poisoning, prompt injections, etc. Attackers often deceive the AI model to tamper with the outputs they provide.

Let’s learn about some common attacks that happen on AI.

Data Poisoning

These attacks usually happen in the training phase when the model is provided with malicious data so that the performance of AI gets compromised. This can be problematic and can make the whole model collapse.

Adversary attacks

There can be adversary attacks by providing the data setup with incorrect inputs so that it gives faulty results to the users. Everyone knows that AI systems work on previously seen data, and once someone manipulates the source data, you can’t do much about it.

These changes in the source data are not easy to tackle, and thus, AI systems are very vulnerable. Adversary attacks can be of two different types.

White box attacks

White box attacks are those cyber attacks on AI in which the attackers know about the ML model used to train that AI tool.

Black box attacks

In Black box attacks, the attackers are unknown to the machine learning model used for training. These attackers rely completely on the input and output results to remodel AI tools according to their agenda.

Prompt injection attacks

Prompt injection attacks are very similar to black box attacks. In these attacks, the vulnerability of AI and ML models towards prompts is used. The tool is injected with manipulated prompts.

This leads to inappropriate and false results that get stronger with more and more uses.

The backdoor attacks

These attacks are the most dangerous ways people can access and manipulate AI models. This is done by placing a code or altering the model differently. This method can help attackers and hackers steal important data and paralyze the system.

Another problematic aspect of this attack is that you can’t easily detect this or may take a long time to detect.

However, it’s not a cup of tea for anyone to do this attack on a model. One must have in-depth knowledge about the model they are about to attack.

Social engineering attacks

These attacks target human users as they use AI more and more. Using AI, the attackers manipulate the users to leak secret or private information. Attackers can exploit the same information for their benefit.

Model inference attacks

As we all know, AI has unlimited power in certain fields and can be fed with much more data than we can imagine. This data can be in emails, usernames, passwords, or other personal information or credentials. What if someone gets to all this info?

This is the case with model inference attacks. Attackers try to remove personal or private data from the AI model.

Model theft attacks

Model theft attacks are done to replicate or steal a well-trained AI model for malicious purposes. Once a model theft takes place, the attackers or hackers get access to the structure of your model, and it becomes easy for them to get into and reverse engineer it.

How can we prevent these attacks?

There are several ways to make AI models safe and prevent such attacks.

Have an idea of the recent developments

Hackers are always updating their ways to get into your AI systems; thus, you must keep yourself updated. Keep an eye on the internet and keep learning about making your model more and more secure.

Robust training

The best way to secure your AI model is by exposing it to similar adversarial threats during training. This way, your AI model can become less vulnerable to such attacks.

Strong Architecture

The vulnerability of your AI model also depends on the quality of the architecture used to build it. Ensure that your architecture for your AI is less vulnerable to cyber attacks.

Focusing on authentication and authorization

Make sure that you have robust authentication and authorization techniques to ensure that there is a negligible chance of getting into your system. One can use role-based access models and multi-factor authentication for this purpose.

Monitor activity

You must have an eye on the activity happening on the backside and front side of your AI section so that you can identify any potential threats.

Use audits

Go for regular audits to ensure the gaps and vulnerabilities arising daily are addressed. This step is very important as something that seems to be completely secure today may be vulnerable to the threats that are about to come.

Encryption is the key

Encrypting sensitive data makes it tough for attackers to intercept it. Whether your data is moving or is at rest encrypted, it can add a different level of security for your AI model.

Trusted Data Sources

While training your AI model, ensure that the data you feed it is from -reliable and trusted sources. One can also use techniques for filtering the data to ensure nothing malicious enters your AI system.

Conclusion

After reading about the attacks and ways to prevent them, owners must update their defense systems actively. There is no way to avoid such attacks other than proactive action and a robust defense mechanism. When you provide work-specific access to others on your model, people can’t access what they aren’t supposed to. This ensures that there’s no internal sabotage happening with your AI system.

If you want to get to the next level, collaborate with industry experts to have a wider outlook on such problems. An AI model takes a lot of hard work and patience to build, and without making it secure enough, you can’t assume it won’t be attacked.